mirror of https://github.com/alibaba/MNN.git

Merge pull request #3087 from alibaba/feature/sync

MNN:Sync: Sync Internal 3.0.0

This commit is contained in:

commit

e460135a0a

|

|

@ -24,6 +24,7 @@ out/

|

|||

.gradle

|

||||

.gradle/

|

||||

build/

|

||||

buildvisionOs/

|

||||

|

||||

# Signing files

|

||||

.signing/

|

||||

|

|

|

|||

|

|

@ -73,7 +73,7 @@ option(MNN_SUPPORT_BF16 "Enable MNN's bf16 op" OFF)

|

|||

option(MNN_LOW_MEMORY "Build MNN support low memory for weight quant model." OFF)

|

||||

option(MNN_CPU_WEIGHT_DEQUANT_GEMM "Build MNN CPU weight dequant related gemm kernels." OFF)

|

||||

|

||||

IF (OHOS)

|

||||

IF (OHOS AND MNN_INTERNAL)

|

||||

include($ENV{NODE_PATH}/@ali/tcpkg/tcpkg.cmake)

|

||||

export_headers(DIR ${CMAKE_SOURCE_DIR}/include/MNN)

|

||||

IF (MNN_BUILD_OPENCV)

|

||||

|

|

@ -209,6 +209,7 @@ option(MNN_VULKAN "Enable Vulkan" OFF)

|

|||

option(MNN_ARM82 "Enable ARMv8.2's FP16 Compute" ON)

|

||||

option(MNN_KLEIDIAI "Enable KLEIDIAI" OFF)

|

||||

option(MNN_ONEDNN "Enable oneDNN" OFF)

|

||||

option(MNN_AVX2 "Open AVX2 Compile for x86 if possible" ON)

|

||||

option(MNN_AVX512 "Enable AVX512" OFF)

|

||||

option(MNN_CUDA "Enable CUDA" OFF)

|

||||

option(MNN_TENSORRT "Enable TensorRT" OFF)

|

||||

|

|

@ -312,6 +313,9 @@ IF(MNN_DEBUG_MEMORY)

|

|||

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fsanitize=address")

|

||||

endif()

|

||||

|

||||

set(MNN_DEPS "")

|

||||

set(MNN_EXTRA_DEPENDS "")

|

||||

|

||||

IF(CMAKE_BUILD_TYPE MATCHES Debug)

|

||||

add_definitions(-DMNN_DEBUG -DDEBUG)

|

||||

if(MSVC)

|

||||

|

|

@ -337,6 +341,13 @@ else()

|

|||

endif()

|

||||

endif()

|

||||

ENDIF(CMAKE_BUILD_TYPE MATCHES Debug)

|

||||

if(OHOS)

|

||||

IF(MNN_USE_LOGCAT)

|

||||

add_definitions(-DMNN_USE_LOGCAT)

|

||||

add_definitions(-Wno-format-security)

|

||||

list(APPEND MNN_EXTRA_DEPENDS libhilog_ndk.z.so)

|

||||

ENDIF()

|

||||

endif()

|

||||

if(CMAKE_SYSTEM_NAME MATCHES "^Android")

|

||||

IF(MNN_USE_LOGCAT)

|

||||

add_definitions(-DMNN_USE_LOGCAT)

|

||||

|

|

@ -456,8 +467,6 @@ IF(MNN_BUILD_LLM)

|

|||

ENDIF()

|

||||

|

||||

|

||||

set(MNN_DEPS "")

|

||||

set(MNN_EXTRA_DEPENDS "")

|

||||

|

||||

# Add Thread dependency

|

||||

find_package(Threads)

|

||||

|

|

@ -505,13 +514,11 @@ if (NOT MSVC)

|

|||

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fstrict-aliasing -ffunction-sections -fdata-sections -ffast-math -fno-rtti -fno-exceptions ")

|

||||

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -fstrict-aliasing -ffunction-sections -fdata-sections -ffast-math")

|

||||

else()

|

||||

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} /fp:fast")

|

||||

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} /fp:fast")

|

||||

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} /fp:precise")

|

||||

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} /fp:precise")

|

||||

endif()

|

||||

|

||||

# Metal

|

||||

set(MNN_DEPS "")

|

||||

set(MNN_EXTRA_DEPENDS "")

|

||||

list(APPEND MNN_DEPS MNN)

|

||||

|

||||

# Plugin

|

||||

|

|

@ -531,14 +538,10 @@ endif()

|

|||

# CoreML

|

||||

IF(MNN_COREML)

|

||||

add_definitions(-DMNN_COREML_ENABLED=1)

|

||||

add_subdirectory(${CMAKE_CURRENT_LIST_DIR}/source/backend/coreml/)

|

||||

include(${CMAKE_CURRENT_LIST_DIR}/source/backend/coreml/CMakeLists.txt)

|

||||

|

||||

IF(MNN_SEP_BUILD)

|

||||

list(APPEND MNN_DEPS MNNCoreML)

|

||||

list(APPEND MNN_EXTRA_DEPENDS MNNCoreML)

|

||||

ELSE()

|

||||

list(APPEND MNN_TARGETS MNNCoreML)

|

||||

list(APPEND MNN_OBJECTS_TO_LINK $<TARGET_OBJECTS:MNNCoreML>)

|

||||

ENDIF()

|

||||

|

||||

find_library(COREML CoreML)

|

||||

find_library(FOUNDATION Foundation)

|

||||

|

|

@ -639,7 +642,7 @@ ELSE()

|

|||

ENDIF()

|

||||

|

||||

# Model Internal. Enable MNN internal features such as model authentication and metrics logging.

|

||||

if (MNN_INTERNAL)

|

||||

if (MNN_INTERNAL AND NOT OHOS) # TODO: support OHOS logging

|

||||

target_compile_options(MNNCore PRIVATE -DMNN_INTERNAL_ENABLED)

|

||||

target_compile_options(MNN_Express PRIVATE -DMNN_INTERNAL_ENABLED)

|

||||

include(${CMAKE_CURRENT_LIST_DIR}/source/internal/logging/CMakeLists.txt)

|

||||

|

|

|

|||

|

|

@ -7,6 +7,10 @@

|

|||

## Intro

|

||||

MNN is a highly efficient and lightweight deep learning framework. It supports inference and training of deep learning models and has industry-leading performance for inference and training on-device. At present, MNN has been integrated into more than 30 apps of Alibaba Inc, such as Taobao, Tmall, Youku, DingTalk, Xianyu, etc., covering more than 70 usage scenarios such as live broadcast, short video capture, search recommendation, product searching by image, interactive marketing, equity distribution, security risk control. In addition, MNN is also used on embedded devices, such as IoT.

|

||||

|

||||

[MNN-LLM](https://github.com/alibaba/MNN/tree/master/transformers/llm) is a large language model runtime solution developed based on the MNN engine. The mission of this project is to deploy LLM models locally on everyone's platforms(Mobile Phone/PC/IOT). It supports popular large language models such as Qianwen, Baichuan, Zhipu, LLAMA, and others. [MNN-LLM User guide](https://mnn-docs.readthedocs.io/en/latest/transformers/llm.html)

|

||||

|

||||

[MNN-Diffusion](https://github.com/alibaba/MNN/tree/master/transformers/diffusion) is a stable diffusion model runtime solution developed based on the MNN engine. The mission of this project is to deploy stable diffusion models locally on everyone's platforms. [MNN-Diffusion User guide](https://mnn-docs.readthedocs.io/en/latest/transformers/diffusion.html)

|

||||

|

||||

|

||||

|

||||

Inside Alibaba, [MNN](https://mp.weixin.qq.com/s/5I1ISpx8lQqvCS8tGd6EJw) works as the basic module of the compute container in the [Walle](https://mp.weixin.qq.com/s/qpeCETty0BqqNJV9CMJafA) System, the first end-to-end, general-purpose, and large-scale production system for device-cloud collaborative machine learning, which has been published in the top system conference OSDI’22. The key design principles of MNN and the extensive benchmark testing results (vs. TensorFlow, TensorFlow Lite, PyTorch, PyTorch Mobile, TVM) can be found in the OSDI paper. The scripts and instructions for benchmark testing are put in the path “/benchmark”. If MNN or the design of Walle helps your research or production use, please cite our OSDI paper as follows:

|

||||

|

|

@ -26,7 +30,9 @@ Inside Alibaba, [MNN](https://mp.weixin.qq.com/s/5I1ISpx8lQqvCS8tGd6EJw) works a

|

|||

|

||||

|

||||

## Documentation and Workbench

|

||||

MNN's docs are in place in [Yuque docs here](https://www.yuque.com/mnn/en) and [Read the docs](https://mnn-docs.readthedocs.io/en/latest).

|

||||

MNN's docs are in place in [Read the docs](https://mnn-docs.readthedocs.io/en/latest).

|

||||

|

||||

You can also read docs/README to build docs's html.

|

||||

|

||||

MNN Workbench could be downloaded from [MNN's homepage](http://www.mnn.zone), which provides pretrained models, visualized training tools, and one-click deployment of models to devices.

|

||||

|

||||

|

|

|

|||

|

|

@ -6,6 +6,10 @@

|

|||

|

||||

[MNN](https://github.com/alibaba/MNN)是一个轻量级的深度神经网络引擎,支持深度学习的推理与训练。适用于服务器/个人电脑/手机/嵌入式各类设备。目前,MNN已经在阿里巴巴的手机淘宝、手机天猫、优酷等30多个App中使用,覆盖直播、短视频、搜索推荐、商品图像搜索、互动营销、权益发放、安全风控等场景。

|

||||

|

||||

[MNN-LLM](https://github.com/alibaba/MNN/tree/master/transformers/llm)是基于MNN引擎开发的大语言模型运行方案,解决大语言模型在本地设备的高效部署问题(手机/个人电脑/嵌入式设备)。支持常见的千问/百川/智谱/LLAMA等大语言模型。使用教程:[MNN-LLM使用教程](https://mnn-docs.readthedocs.io/en/latest/transformers/llm.html)

|

||||

|

||||

[MNN-Diffusion](https://github.com/alibaba/MNN/tree/master/transformers/diffusion)是基于MNN引擎开发的Stable Diffusion文生图模型运行方案,解决Stable Diffusion模型在本地设备的高效部署问题。使用教程:[MNN-Diffusion使用教程](https://mnn-docs.readthedocs.io/en/latest/transformers/diffusion.html)

|

||||

|

||||

|

||||

|

||||

在阿里巴巴中,[MNN](https://mp.weixin.qq.com/s/5I1ISpx8lQqvCS8tGd6EJw)被用作为[Walle](https://mp.weixin.qq.com/s/qpeCETty0BqqNJV9CMJafA)系统中计算容器的基础模块。Walle是首个端到端、通用型、规模化产业应用的端云协同机器学习系统,发表于操作系统顶会OSDI 2022。Walle的论文中解释了MNN的关键设计理念,并提供了MNN相对于其他深度学习框架(TensorFlow, TensorFlow Lite, PyTorch, PyTorch Mobile, TVM)的benchmark测试结果。相关测试脚本和说明文档被放在“/benchmark”目录下。如果MNN或Walle的设计对你的研究或生产有所助益,欢迎引用我们的OSDI论文:

|

||||

|

|

@ -26,7 +30,9 @@

|

|||

## 文档与工作台

|

||||

MNN文档:

|

||||

- [最新文档(readthedocs)](https://mnn-docs.readthedocs.io/en/latest/index.html)

|

||||

- [语雀文档](https://www.yuque.com/mnn/cn)

|

||||

|

||||

- 也可阅读 docs/README ,编译本地文档

|

||||

|

||||

|

||||

[MNN官网](http://www.mnn.zone)上还可以下载MNN团队全新力作MNN工作台,涵盖开箱即用模型、可视化训练等工具,更可以一键部署到多端设备。

|

||||

|

||||

|

|

|

|||

|

|

@ -40,7 +40,8 @@ MNN使用CMake构建项目,CMake中的宏定义列表如下:

|

|||

| MNN_VULKAN | 是否构建`Vulkan`后端,默认为`OFF` |

|

||||

| MNN_ARM82 | 编译ARM架构时,是否构建`Armv8.2`后端,以支持FP16计算,默认为`ON` |

|

||||

| MNN_ONEDNN | 是否使用`oneDNN`,默认为`OFF` |

|

||||

| MNN_AVX512 | 是否构建`avx512`后端,默认为`OFF` |

|

||||

| MNN_AVX2 | 在`MNN_USE_SSE`开启的基础上,是否增加AVX2指令的支持,默认为`ON` |

|

||||

| MNN_AVX512 | 在`MNN_USE_SSE`和`MNN_AVX2`开启的基础上,是否增加`avx512`指令集的支持,默认为`OFF` |

|

||||

| MNN_CUDA | 是否构建`Cuda`后端,默认为`OFF` |

|

||||

| MNN_CUDA_PROFILE | 是否打开CUDA profile工具,默认为`OFF` |

|

||||

| MNN_CUDA_QUANT | 是否打开CUDA 量化文件编译,默认为`OFF` |

|

||||

|

|

@ -85,3 +86,4 @@ MNN使用CMake构建项目,CMake中的宏定义列表如下:

|

|||

| MNN_SUPPORT_TRANSFORMER_FUSE | 是否支持Fuse Transformer相关OP实现,默认为 `OFF` |

|

||||

| MNN_BUILD_LLM | 是否构建基于MNN的llm库和demo,默认为`OFF` |

|

||||

| MNN_BUILD_DIFFUSION | 是否构建基于MNN的diffusion demo,需要打开MNN_BUILD_OPENCV和MNN_IMGCODECS宏使用 默认为`OFF` |

|

||||

| MNN_KLEIDIAI | 是否集成ARM的klediAI加速库【目前处于实验状态,只能跑对称量化的LLM模型】,默认为`OFF` |

|

||||

|

|

|

|||

|

|

@ -1,17 +1,17 @@

|

|||

# 主库编译

|

||||

默认编译产物为:`libMNN.so`,`express/libMNN_Express.so`

|

||||

## Linux/MacOS

|

||||

- 环境要求

|

||||

### 环境要求

|

||||

- cmake >= 3.10

|

||||

- gcc >= 4.9 或者使用 clang

|

||||

- 相关编译选项

|

||||

### 相关编译选项

|

||||

- `MNN_AVX512` 是否使用AVX512指令,需要gcc9以上版本编译

|

||||

- `MNN_OPENCL` 是否使用OpenCL后端,针对GPU设备

|

||||

- `MNN_METAL` 是否使用Metal后端,针对MacOS/iOSGPU设备

|

||||

- `MNN_VULKAN` 是否使用Vulkan后端,针对GPU设备

|

||||

- `MNN_CUDA` 是否使用CUDA后端,针对Nivida GPU设备

|

||||

- 其他编译选项可自行查看 CMakeLists.txt

|

||||

- 具体步骤

|

||||

### 具体步骤

|

||||

1. 准备工作 (可选,修改 MNN Schema 后需要)

|

||||

```bash

|

||||

cd /path/to/MNN

|

||||

|

|

@ -22,6 +22,15 @@

|

|||

```bash

|

||||

mkdir build && cd build && cmake .. && make -j8

|

||||

```

|

||||

### Mac M1 上编译

|

||||

- Mac M1 较为特殊的一点是作为过渡期间的芯片支持Arm/x64双架构,一般需要额外指定来获取需要的架构

|

||||

- 在 cmake 步骤增加 `-DCMAKE_OSX_ARCHITECTURES=arm64` 可以编译出 Arm 架构的库,对应地编译 x64 架构时加 `-DCMAKE_OSX_ARCHITECTURES=x86_64`:

|

||||

|

||||

```

|

||||

cd /path/to/MNN

|

||||

mkdir build && cd build && cmake .. -DCMAKE_OSX_ARCHITECTURES=arm64 && make -j8

|

||||

```

|

||||

|

||||

## Windows(非ARM架构)

|

||||

- 环境要求

|

||||

- Microsoft Visual Studio >= 2017

|

||||

|

|

@ -87,14 +96,23 @@

|

|||

mkdir build_64 && cd build_64 && ../build_64.sh

|

||||

```

|

||||

## iOS

|

||||

可基于脚本编译或者基于xcode工程编译

|

||||

|

||||

- 环境要求

|

||||

- xcode

|

||||

- cmake

|

||||

- 相关编译选项

|

||||

- `MNN_METAL` 是否使用Metal后端,Metal后端可以利用GPU加速

|

||||

- `MNN_COREML` 是否使用CoreML后端,CoreML后端可以利用ANE硬件加速

|

||||

- `MNN_ARM82` 是否支持fp16推理,开启该编译选项后,在precision设成Precision_Low时,会在支持的设备(ARMv8.2 及以上架构)上启用低精度(fp16)推理,减少内存占用,提升性能

|

||||

- 具体步骤

|

||||

- 在macOS下,用Xcode打开project/ios/MNN.xcodeproj,点击编译即可

|

||||

|

||||

- 基于 xcode 编译:用Xcode打开project/ios/MNN.xcodeproj,点击编译即可,工程中默认打开上述所有编译选项

|

||||

|

||||

- 基于脚本编译:运行脚本并开启`MNN_ARM82`选项

|

||||

```

|

||||

sh package_scripts/ios/buildiOS.sh "-DMNN_ARM82=true"

|

||||

```

|

||||

|

||||

## 其他平台交叉编译

|

||||

由于交叉编译的目标设备及厂商提供的编译环境类型众多,本文恕无法提供手把手教学。 以下是大致流程,请按照具体场景做相应修改。

|

||||

交叉编译大致上分为以下两个步骤,即获取交叉编译器以及配置CMake进行交叉编译。

|

||||

|

|

@ -137,3 +155,49 @@

|

|||

-DCMAKE_CXX_COMPILER=$cross_compile_toolchain/bin/aarch64-linux-gnu-g++

|

||||

make -j4

|

||||

```

|

||||

|

||||

## Web

|

||||

|

||||

- 可以把 MNN 源代码编译为 WebAssembly 以便在浏览器中使用

|

||||

|

||||

### 安装 emcc

|

||||

参考 https://emscripten.org/docs/getting_started/downloads.html ,安装完成后并激活,此时可使用 emcmake

|

||||

|

||||

### 编译(通用)

|

||||

- 使用 emcmake cmake 替代 cmake ,然后 make 即可:

|

||||

```

|

||||

mkdir build

|

||||

cd build

|

||||

emcmake cmake .. -DCMAKE_BUILD_TYPE=Release -DMNN_FORBID_MULTI_THREAD=ON -DMNN_USE_THREAD_POOL=OFF -DMNN_USE_SSE=OFF

|

||||

emmake make MNN -j16

|

||||

```

|

||||

|

||||

编译完成后产出 libMNN.a ,可在后续的 webassembly 程序中链接,链接时一般要添加 -s ALLOW_MEMORY_GROWTH=1 ,避免内存不足后 crash

|

||||

|

||||

### SIMD 支持

|

||||

|

||||

- 如果确认目标设备支持Web Simd ,在cmake时加上 -msimd128 -msse4.1 ,可以较大提升性能,eg:

|

||||

```

|

||||

mkdir build

|

||||

cd build

|

||||

emcmake cmake .. -DCMAKE_BUILD_TYPE=Release -DMNN_BUILD_TEST=true -DCMAKE_CXX_FLAGS="-msimd128 -msse4.1" -DMNN_FORBID_MULTI_THREAD=ON -DMNN_USE_THREAD_POOL=OFF -DMNN_USE_SSE=ON

|

||||

emmake make MNN -j16

|

||||

```

|

||||

|

||||

### 测试

|

||||

由于Web上文件系统不一致,建议只编译run_test.out运行,其他测试工具需要加上--preload-file {dir}

|

||||

|

||||

- 编译示例

|

||||

|

||||

```

|

||||

mkdir build

|

||||

cd build

|

||||

emcmake cmake .. -DCMAKE_BUILD_TYPE=Release -DMNN_BUILD_TEST=true -DCMAKE_CXX_FLAGS="-msimd128 -msse4.1 -s ALLOW_MEMORY_GROWTH=1" -DMNN_FORBID_MULTI_THREAD=ON -DMNN_USE_THREAD_POOL=OFF -DMNN_USE_SSE=ON

|

||||

emmake make -j16

|

||||

```

|

||||

|

||||

- 运行

|

||||

```

|

||||

node run_test.out.js speed/MatMulBConst //测试性能

|

||||

node run_test.out.js //测试功能

|

||||

```

|

||||

|

|

|

|||

|

|

@ -335,33 +335,22 @@ REGISTER_METAL_OP_CREATOR(MetalMyCustomOpCreator, OpType_MyCustomOp);

|

|||

重新运行一下 CMake ,或者手动在Xcode工程中新加文件

|

||||

|

||||

### 添加Vulkan实现

|

||||

1. 添加Shader

|

||||

在`source/backend/vulkan/execution/glsl`目录下添加具体的shader(*.comp)。若输入内存布局为`NC4HW4`,则按`image`实现,否则采用buffer实现。可以参考目录下已有实现。然后,执行`makeshader.py`脚本编译Shader。

|

||||

Vulkan后端当前包含两种张量存储类型:buffer与image。开发者可在编译时通过宏`MNN_VULKAN_IMAGE`自行选择需要的存储类型。当开发者需要为Vulkan后端添加算子时,亦需要考虑选择何种存储类型并在相应目录下进行开发。下以image类型为例,阐述为Vulkan后端添加算子的主要流程。

|

||||

|

||||

2. 实现类声明

|

||||

在目录`source/backend/vulkan/execution/`下添加`VulkanMyCustomOp.hpp`和`VulkanMyCustomOp.cpp`:

|

||||

```cpp

|

||||

class VulkanMyCustomOp : public VulkanBasicExecution {

|

||||

public:

|

||||

VulkanMyCustomOp(const Op* op, Backend* bn);

|

||||

virtual ~VulkanMyCustomOp();

|

||||

ErrorCode onEncode(const std::vector<Tensor*>& inputs,

|

||||

const std::vector<Tensor*>& outputs,

|

||||

const VulkanCommandPool::Buffer* cmdBuffer) override;

|

||||

private:

|

||||

// GPU Shader所需的参数

|

||||

std::shared_ptr<VulkanBuffer> mConstBuffer;

|

||||

// Pipeline

|

||||

const VulkanPipeline* mPipeline;

|

||||

// Layout Descriptor Set

|

||||

std::shared_ptr<VulkanPipeline::DescriptorSet> mDescriptorSet;

|

||||

};

|

||||

```

|

||||

|

||||

3. 实现

|

||||

实现函数`onEncode`,首先需要做内存布局检查:若为`NC4HW4`,则Shader用image实现,否则用buffer。执行完毕返回NO_ERROR。

|

||||

|

||||

4. 注册实现类

|

||||

1. 实现Execution

|

||||

- 执行脚本`source/backend/vulkan/image/compiler/VulkanCodeGen.py`,该脚本将向`source/backend/vulkan/image/execution`中添加`VulkanMyOp.hpp`与`VulkanMyOp.cpp`的模版代码

|

||||

- 实现构造函数

|

||||

- 从CPU中读取常量参数,并写入GPU中

|

||||

- 创建算子所需的pipeline

|

||||

- 确定要使用的shader以及Macro

|

||||

- set descriptorTypes,即确定shader中用到的显存对象的类型

|

||||

- 调用getPipeline接口

|

||||

- 实现onEncode

|

||||

- 显存资源申请并更新descriptorSet,将shader中需要读写的显存对象写入descriptorSet

|

||||

- 添加memoryBarrier

|

||||

- 把pipeline绑到cmdBuffer与descriptorSet

|

||||

- command dispatch

|

||||

- 注册算子并添加创建类

|

||||

```cpp

|

||||

class VulkanMyCustomOpCreator : public VulkanBackend::Creator {

|

||||

public:

|

||||

|

|

@ -377,6 +366,15 @@ static bool gResistor = []() {

|

|||

}();

|

||||

```

|

||||

|

||||

2. 实现shader及编译

|

||||

- 编写Compute Shader文件`myOp.comp`,添加至目录`source/backend/vulkan/image/execution/glsl`

|

||||

- 将算子中用到的宏加入`source/backend/vulkan/image/execution/glsl/macro.json`

|

||||

- 执行脚本`source/backend/vulkan/image/compiler/makeshader.py`,该脚本将编译`myOp.comp`,并更新`source/backend/vulkan/image/compiler/AllShader.cpp`、`source/backend/vulkan/image/shaders/AllShader.h`以及`source/backend/vulkan/image/compiler/VulkanShaderMap.cpp`

|

||||

> MNN Vulkan当前使用glslangValidator(glslang仓库地址:<https://github.com/KhronosGroup/glslang>,版本号:12.2.0,commit id:d1517d64cfca91f573af1bf7341dc3a5113349c0)编译所有的compute shader。开发者如需保持自行编译后得到的二进制编译结果与MNN仓库中现有的编译结果一致,需要确保环境中的glslang的版本与MNN所使用的一致。

|

||||

|

||||

|

||||

|

||||

|

||||

### 添加OpenCL实现

|

||||

1. 添加Kernel

|

||||

在`source/backend/opencl/execution/cl`目录添加具体的kernel(*.cl)。目前feature map均使用`image2d`实现。可以参考目录下已有实现。然后执行`opencl_codegen.py`来生成kernel映射。

|

||||

|

|

|

|||

15

docs/faq.md

15

docs/faq.md

|

|

@ -3,6 +3,8 @@

|

|||

- [模型转换后结果与其他框架不一致](faq.html#id8)

|

||||

- [compute shape error](faq.html#compute-shape-error-for-xxx)

|

||||

- [模型转换时有Error信息](faq.html#reshape-error)

|

||||

- [模型转换加上fp16没有性能提升](faq.html#fp16)

|

||||

- [如何开启动态量化](faq.html#weightquantbits)

|

||||

- [模型量化后为什么比浮点慢](faq.html#id14)

|

||||

- [输入输出的elementSize与实际有区别](faq.html#tensor-elementsize)

|

||||

- [MNN模型如何加密](faq.html#id18)

|

||||

|

|

@ -112,6 +114,14 @@ opConverter ==> MNN Converter NOT_SUPPORTED_OP: [ ANY_OP_NAME ]

|

|||

### 模型转换后与原框架结果不一致

|

||||

先使用MNN中的模型一致性验证脚本进行测试,确定不是调用方法或其他错误,[使用方法](./tools/convert.html#id3)

|

||||

|

||||

### 模型转换加上fp16后没有性能提升

|

||||

此功能只支持压缩模型数据,在运行时仍然先解压到float32运算。如果希望使用 fp16 加速,打开 `MNN_ARM82` 并在加载模型时设置 precision = low

|

||||

|

||||

### 模型转换加上weightQuantBits后如何进行加速

|

||||

可以通过动态量化功能,加载仅权重量化的模型,降低内存占用和提升性能

|

||||

1. 打开 `MNN_LOW_MEMORY` 编译宏编译 MNN (支持动态量化功能)

|

||||

2. 使用 mnn 模型时 memory 设成 low

|

||||

|

||||

## Pymnn

|

||||

### import MNN 出现 import numpy error

|

||||

临时解决方案:升级 numpy 版本到 1.20.0 或以上

|

||||

|

|

@ -169,10 +179,10 @@ const float* outputPtr = output->readMap<float>();

|

|||

|

||||

### Android 设备无法查看日志

|

||||

Android 系统有两类打印日志的方式: printf 和 logcat. 默认 MNN 的编译脚本使用 printf,这样方便在命令行中调试。集成到 App 上时,用 cmake -DMNN_USE_LOGCAT=ON 将打印日志的方式改成 logcat 即可用 adb logcat 查看

|

||||

###

|

||||

|

||||

### 如何增加 opencl so 地址?

|

||||

MNN opencl 后端默认采用 dlopen 的方式动态打开设备的 opencl 驱动,相应位置若找不到您设备上的驱动,请修改 **OpenCLWrapper.cpp**

|

||||

###

|

||||

|

||||

### TensorArray Op 与 Switch / Merge 控制流支持

|

||||

TensorArray 和控制流支持需要借助 MNN-Express ,

|

||||

请参考 demo/exec/transformerDemo.cpp 的接口使用

|

||||

|

|

@ -284,6 +294,7 @@ GPU 后端调用 copy 的时间包含两个部分

|

|||

- x64 + vnni 指令,量化计算有 sdot 指令,明显快于 FP32 ,编译 MNN 时需要开启 MNN_AVX512 以支持这个指令,一般相比 AVX512 的浮点运算快 30%

|

||||

- ARM v7a / ARMv8 :量化计算采用 int8 乘加到 int16,再双加到 int32 的方式,计算效率略快于浮点(一般 30% 左右提升)。

|

||||

- ARMv8.2 架构有 sdot 指令,但同时 FP32 相对之前架构发射数也提升了一倍,也支持了比 FP32 快一倍的 FP16 向量计算指令,MNN 会检查设备架构以开启 sdot / smmla ,理想情况下量化计算性能比 FP32 快1倍以上,比 FP16 快 20%。

|

||||

- ARMv8.6 架构有 smmla 指令,理想情况下量化计算性能比 FP32 快3倍以上,比 FP16 快1倍以上,比 BF16 快 20%。

|

||||

|

||||

## 其他问题

|

||||

### MNN模型如何加密

|

||||

|

|

|

|||

|

|

@ -58,7 +58,6 @@

|

|||

train/expr

|

||||

train/data

|

||||

train/optim

|

||||

train/quant

|

||||

train/finetune

|

||||

train/distl

|

||||

|

||||

|

|

@ -69,6 +68,7 @@

|

|||

|

||||

transformers/diffusion

|

||||

transformers/llm

|

||||

transformers/models

|

||||

|

||||

.. toctree::

|

||||

:maxdepth: 1

|

||||

|

|

@ -78,7 +78,6 @@

|

|||

tools/convert

|

||||

tools/test

|

||||

tools/benchmark

|

||||

tools/quant

|

||||

tools/compress

|

||||

tools/visual

|

||||

tools/python

|

||||

|

|

|

|||

|

|

@ -270,7 +270,16 @@ const std::map<std::string, Tensor*>& getSessionInputAll(const Session* session)

|

|||

|

||||

在只有一个输入tensor时,可以在调用`getSessionInput`时传入NULL以获取tensor。

|

||||

|

||||

### 拷贝数据

|

||||

### 【推荐】映射填充数据

|

||||

**映射输入Tensor的内存,部分后端可以免数据拷贝**

|

||||

```cpp

|

||||

auto input = interpreter->getSessionInput(session, NULL);

|

||||

void* host = input->map(MNN::Tensor::MAP_TENSOR_WRITE, input->getDimensionType());

|

||||

// fill host memory data

|

||||

input->unmap(MNN::Tensor::MAP_TENSOR_WRITE, input->getDimensionType(), host);

|

||||

```

|

||||

|

||||

### 【不推荐】拷贝填充数据

|

||||

NCHW示例,适用 ONNX / Caffe / Torchscripts 转换而来的模型:

|

||||

```cpp

|

||||

auto inputTensor = interpreter->getSessionInput(session, NULL);

|

||||

|

|

@ -293,7 +302,7 @@ delete nhwcTensor;

|

|||

通过这类拷贝数据的方式,用户只需要关注自己创建的tensor的数据布局,`copyFromHostTensor`会负责处理数据布局上的转换(如需)和后端间的数据拷贝(如需)。

|

||||

|

||||

|

||||

### 直接填充数据

|

||||

### 【不推荐】直接填充数据

|

||||

```cpp

|

||||

auto inputTensor = interpreter->getSessionInput(session, NULL);

|

||||

inputTensor->host<float>()[0] = 1.f;

|

||||

|

|

@ -549,8 +558,16 @@ const std::map<std::string, Tensor*>& getSessionOutputAll(const Session* session

|

|||

|

||||

**注意:当`Session`析构之后使用`getSessionOutput`获取的`Tensor`将不可用**

|

||||

|

||||

### 拷贝数据

|

||||

**不熟悉MNN源码的用户,必须使用这种方式获取输出!!!**

|

||||

### 【推荐】映射输出数据

|

||||

**映射输出Tensor的内存数据,部分后端可以免数据拷贝**

|

||||

```cpp

|

||||

auto outputTensor = net->getSessionOutput(session, NULL);

|

||||

void* host = outputTensor->map(MNN::Tensor::MAP_TENSOR_READ, outputTensor->getDimensionType());

|

||||

// use host memory by yourself

|

||||

outputTensor->unmap(MNN::Tensor::MAP_TENSOR_READ, outputTensor->getDimensionType(), host);

|

||||

```

|

||||

### 【不推荐】拷贝输出数据

|

||||

**采用纯内存拷贝的方式,拷贝需要花费时间**

|

||||

NCHW (适用于 Caffe / TorchScript / Onnx 转换而来的模型)示例:

|

||||

```cpp

|

||||

auto outputTensor = interpreter->getSessionOutput(session, NULL);

|

||||

|

|

@ -577,7 +594,7 @@ delete nhwcTensor;

|

|||

|

||||

|

||||

|

||||

### 直接读取数据

|

||||

### 【不推荐】直接读取数据

|

||||

**由于绝大多数用户都不熟悉MNN底层数据布局,所以不要使用这种方式!!!**

|

||||

```cpp

|

||||

auto outputTensor = interpreter->getSessionOutput(session, NULL);

|

||||

|

|

|

|||

|

|

@ -1,11 +1,13 @@

|

|||

# 模型压缩工具箱

|

||||

# 模型压缩 / 模型量化

|

||||

|

||||

## 介绍

|

||||

### 是什么?

|

||||

MNN模型压缩工具箱提供了包括低秩分解、剪枝、量化等模型压缩算法的实现,并且MNN进一步实现了其中一些需要软件特殊实现的算法(如稀疏计算和量化)的底层计算过程,因此,此工具箱需要配合MNN推理框架来使用。

|

||||

具体来说,MNN压缩工具箱包含两个组成部分:

|

||||

1. **MNN框架自身提供的压缩工具**(输入MNN模型,输出MNN模型)

|

||||

2. **mnncompress**(基于主流训练框架TF/Pytorch的模型压缩工具)。

|

||||

MNN模型压缩工具提供了包括低秩分解、剪枝、量化等模型压缩算法的实现,并且MNN进一步实现了其中一些需要软件特殊实现的算法(如稀疏计算和量化)的底层计算过程,因此,此工具箱需要配合MNN推理框架来使用。

|

||||

具体来说,MNN压缩工具/量化工具包含三个部分,使用复杂度逐步上升:

|

||||

1. **模型转换工具中的压缩功能**(只实现权值量化,在模型转换过程中增加参数即可实现)

|

||||

2. **离线量化工具**(实现权值量化及特征量化,需要少量测试数据)

|

||||

3. **mnncompress**(基于主流训练框架TF/Pytorch的模型压缩工具,需要训练数据和对应的训练框架环境)。

|

||||

|

||||

### 有什么?

|

||||

目前提供的能力如下表所示:

|

||||

|

||||

|

|

@ -26,64 +28,79 @@ MNN模型压缩工具箱提供了包括低秩分解、剪枝、量化等模型

|

|||

| 训练量化 | 将float卷积转换为int8卷积计算,需要进行训练,可提高量化模型精度,降低存储量到原始模型的四分之一,降低内存,加速计算(某些模型可能会比float模型慢,因为float的优化方法和int8不同) | LSQ,OAQ,WAQ |

|

||||

| 直接权值量化 | 仅将模型中的权值进行量化,计算时还原为float进行计算,因此仅减少模型存储量,计算速度和float相同,可以在模型转换时一键完成,8bit量化情况下,精度基本不变,模型大小减小到原来的1/4 | 对称量化,非对称量化 |

|

||||

| 训练权值量化 | 特点同直接权值量化,但通过mnncompress压缩算法插件实现,因而可以提供更低比特的权值量化,以减少更多的存储量,并提高权值量化之后模型的精度,例如4bit量化情况下,模型大小减小到原来的1/8 | 对称量化 |

|

||||

| FP16 | 将FP32计算转换为FP16计算,可在模型转换时一键完成,模型大小减小为原来的1/2,精度基本无损,并提高计算速度(需要硬件支持FP16计算) | - |

|

||||

| FP16 | 将FP32计算转换为FP16计算,可在模型转换时一键完成,模型大小减小为原来的1/2,精度基本无损 | - |

|

||||

|

||||

### 怎么用?

|

||||

1. 如果只想使用离线压缩方法,可以将模型转换为MNN模型之后使用对应的工具进行压缩。这类压缩算法不需要进行训练finetune,所以通常运行得很快。

|

||||

2. 如果离线压缩方法的精度不满足要求,且能够进行训练finetune的话,可以使用**mnncompress**中提供的压缩算法插件将原始模型进行压缩,得到压缩之后的模型和压缩信息描述文件,然后将这两个文件输入到MNN模型转换工具得到最终的MNN压缩模型。需要训练的压缩算法可以提供更好的精度,但需要一定的时间进行finetune训练,此finetune训练需要的时间一般比模型从0开始训练要少很多。

|

||||

3. 这些算法中有些是可以叠加使用的,以取得更好的压缩效果。推荐使用pipeline(**其中方框中的算法均为可选,叠加压缩算法若精度不好,可选择使用**):

|

||||

1. 使用模型转换工具中的压缩功能无需额外数据,只要在模型转换时加对应参数即可,开启动态量化功能后也可以对卷积等计算量大的算子实现量化加速。

|

||||

2. 使用离线量化可以使大部分算子支持量化加速,这个可以将模型转换为MNN模型之后使用离线量化工具进行压缩,需要少量测试数据,但不需要进行训练finetune,通常运行得很快。

|

||||

3. 如果离线压缩方法的精度不满足要求,且能够进行训练finetune的话,可以使用**mnncompress**中提供的压缩算法插件将原始模型进行压缩,得到压缩之后的模型和压缩信息描述文件,然后将这两个文件输入到MNN模型转换工具得到最终的MNN压缩模型。需要训练的压缩算法可以提供更好的精度,但需要一定的时间进行finetune训练,此finetune训练需要的时间一般比模型从0开始训练要少很多。

|

||||

4. 这些算法中有些是可以叠加使用的,以取得更好的压缩效果。推荐使用pipeline(**其中方框中的算法均为可选,叠加压缩算法若精度不好,可选择使用**):

|

||||

|

||||

|

||||

## MNN框架自身提供的压缩工具

|

||||

### 使用方法

|

||||

MNN框架压缩工具是基于离线量化工具和MNN转换工具来实现压缩功能的,这两个工具均提供c++版本和python版本,安装方式如下:

|

||||

## 使用模型转换工具的压缩功能

|

||||

|

||||

### 模型转换工具安装

|

||||

- c++工具安装

|

||||

|

||||

需要源码编译MNN转换工具 `MNNConvert` 和量化工具 `quantized.out`

|

||||

源码编译MNN转换工具 `MNNConvert`

|

||||

```bash

|

||||

cd build

|

||||

cmake .. -DMNN_BUILD_CONVERTER=ON -DMNN_BUILD_QUANTOOLS=ON

|

||||

cmake .. -DMNN_BUILD_CONVERTER=ON

|

||||

make -j8

|

||||

```

|

||||

- python工具安装

|

||||

```bash

|

||||

# 外部版本MNN,外网安装方式

|

||||

pip install MNN

|

||||

# 外部版本MNN,集团内安装方式

|

||||

pip install --index-url https://pypi.antfin-inc.com/simple/ -U MNN

|

||||

# 内部版本MNN

|

||||

pip install --index-url https://pypi.antfin-inc.com/simple/ -U MNN-Internal

|

||||

# 安装之后,命令行中将有如下工具:

|

||||

mnn:显示MNN命令行工具

|

||||

mnnconvert:转换器 MNNConvert 的预编译工具,功能同 MNNConvert

|

||||

mnnquant:量化工具 quantized.out 的预编译工具,功能同 quantized.out

|

||||

```

|

||||

### MNN离线量化工具

|

||||

#### 原理

|

||||

将float卷积转换为int8卷积进行计算(仅量化卷积,建议将FC转为1*1卷积实现),同时会通过MNN几何计算机制将量化信息在网络中进行传播,以支持尽可能多的算子的量化计算。模型大小减少为原始模型的1/4,并减少内存,提高推理速度(某些模型可能量化之后变慢,因为float的计算可以使用winograd、strassen等优化算法,而离线量化的int8计算并没有这些优化,如果要使用int8量化的特殊优化,如OAQ、WAQ等,需要使用mnncompress)。

|

||||

#### 单输入、图片输入模型的量化

|

||||

这类模型可以使用 `quantized.out`(或`mnnquant`)进行量化,使用文档在:[quantized.out](quant.md),[mnnquant.md](python.html#mnnquant)

|

||||

#### 通用模型的量化

|

||||

通用模型量化工具可以支持任意输入和任意输入类型的模型的量化,基于MNN python包,使用文档在:[MNNPythonOfflineQuant](https://github.com/alibaba/MNN/tree/master/tools/MNNPythonOfflineQuant)

|

||||

|

||||

**注意:**`calibration_dataset.py`中`__getitem__`返回为一个输入sample,其形状不应该包含batch维度,在量化时我们会根据工具命令行中传入的batch参数,stack出一个batch的数据,但我们默认batch维度在第一维,所以,如果你的某个输入的batch维不在第一维,你需要在你对应的输入之前加一个transpose。

|

||||

### MNN权值量化工具

|

||||

#### 原理

|

||||

仅将模型中卷积的float权值量化为int8存储,推理时反量化还原为float权值进行计算。因此,其推理速度和float模型一致,但是模型大小可以减小到原来的1/4,可以通过模型转换工具一键完成,比较方便。推荐float模型性能够用,仅需要减少模型大小的场景使用。

|

||||

#### 使用方法

|

||||

使用`MNNConvert`(c++)或者`mnnconvert`(python包中自带)进行转换,转换命令行中加上下述选项即可:

|

||||

### 权值量化

|

||||

- 仅将模型中卷积的float权值量化为int8存储,在不开启动态量化功能的情况下,推理时反量化还原为float权值进行计算。因此,其推理速度和float模型一致,但是模型大小可以减小到原来的1/4,可以通过模型转换工具一键完成,比较方便,推荐优先使用。

|

||||

- 使用`MNNConvert`(c++)或者`mnnconvert`(python包中自带)进行转换,转换命令行中加上下述选项即可:

|

||||

```bash

|

||||

--weightQuantBits 8 [--weightQuantAsymmetric](可选)

|

||||

--weightQuantBits 8 [--weightQuantAsymmetric](可选) [--weightQuantBlock 128](可选)

|

||||

```

|

||||

`--weightQuantAsymmetric` 选项是指使用非对称量化方法,精度要比默认的对称量化精度好一些。

|

||||

### MNN FP16压缩工具

|

||||

#### 原理

|

||||

将模型中FP32权值转换为FP16存储,并在支持的设备上开启FP16推理,可以获得推理加速,并且速度减少到原来的1/2。可以在模型转换时一键完成,使用方便。

|

||||

#### 使用方法

|

||||

使用`MNNConvert`(c++)或者`mnnconvert`(python包中自带)进行转换,转换命令行中加上下述选项即可:

|

||||

`--weightQuantBlock 128` 表示以128为单位进行量化,如不设置则以输入通道数为单位进行量化。如果牺牲一些存储大小来提升量化精度,可以增加这个设置,理论上越小精度越高,但建议不要低于32。

|

||||

- 动态量化

|

||||

可以通过如下方式打开MNN运行时的动态量化支持,使权值量化后的模型中卷积等核心算子使用量化计算,降低内存并提升性能

|

||||

1. 打开 MNN_LOW_MEMORY 编译宏编译 MNN (支持动态量化功能)

|

||||

2. 使用 mnn 模型时 memory mode 设成 low

|

||||

|

||||

### FP16压缩

|

||||

- 将模型中FP32权值转换为FP16存储,并在支持的设备上开启FP16推理,可以获得推理加速,并且速度减少到原来的1/2。可以在模型转换时一键完成,使用方便。

|

||||

- 使用`MNNConvert`(c++)或者`mnnconvert`(python包中自带)进行转换,转换命令行中加上下述选项即可:

|

||||

```bash

|

||||

--fp16

|

||||

```

|

||||

|

||||

## 离线量化工具

|

||||

### 离线量化工具安装

|

||||

- c++工具安装

|

||||

|

||||

需要源码编译量化工具 `quantized.out`

|

||||

```bash

|

||||

cd build

|

||||

cmake .. -DMNN_BUILD_QUANTOOLS=ON

|

||||

make -j8

|

||||

```

|

||||

- python工具安装

|

||||

```bash

|

||||

pip install MNN

|

||||

# 安装之后,命令行中将有如下工具:

|

||||

mnn:显示MNN命令行工具

|

||||

mnnconvert:转换器 MNNConvert 的预编译工具,功能同 MNNConvert

|

||||

mnnquant:量化工具 quantized.out 的预编译工具,功能同 quantized.out

|

||||

```

|

||||

|

||||

### 离线量化原理

|

||||

|

||||

将float卷积转换为int8卷积进行计算(仅量化卷积,建议将FC转为1*1卷积实现),同时会通过MNN几何计算机制将量化信息在网络中进行传播,以支持尽可能多的算子的量化计算。模型大小减少为原始模型的1/4,并减少内存,提高推理速度(某些模型可能量化之后变慢,因为float的计算可以使用winograd、strassen等优化算法,而离线量化的int8计算并没有这些优化,如果要使用int8量化的特殊优化,如OAQ、WAQ等,需要使用mnncompress)。

|

||||

可以使用 `quantized.out`(或`mnnquant`)进行量化,使用文档在:[quantized.out](quant.md),[mnnquant.md](python.html#mnnquant)

|

||||

|

||||

## mnncompress

|

||||

### 使用方法

|

||||

#### 安装

|

||||

|

|

|

|||

|

|

@ -31,7 +31,7 @@ Usage:

|

|||

--MNNModel arg 转换之后保存的MNN模型文件名, ex: *.mnn

|

||||

|

||||

--fp16 将conv/matmul/LSTM的float32参数保存为float16,

|

||||

模型将减小一半,精度基本无损

|

||||

模型将减小一半,精度基本无损,运行速度和float32模型一致

|

||||

|

||||

--bizCode arg MNN模型Flag, ex: MNN

|

||||

|

||||

|

|

@ -41,7 +41,7 @@ Usage:

|

|||

|

||||

--weightQuantBits arg arg=2~8,此功能仅对conv/matmul/LSTM的float32权值进行量化,

|

||||

仅优化模型大小,加载模型后会解码为float32,量化位宽可选2~8,

|

||||

运行速度和float32模型一致。8bit时精度基本无损,模型大小减小4倍

|

||||

不开启动态量化的情况下,运行速度和float32模型一致。8bit时精度基本无损,模型大小减小4倍

|

||||

default: 0,即不进行权值量化

|

||||

|

||||

--weightQuantAsymmetric 与weightQuantBits结合使用,决定是否用非对称量化,默认为`true`

|

||||

|

|

@ -77,7 +77,9 @@ Usage:

|

|||

--detectSparseSpeedUp arg

|

||||

可选值:{0, 1}, 默认为1, 会检测权重是否使用稀疏化加速

|

||||

|

||||

--saveExternalData 将权重,常量等数据存储在额外文件中,默认为`false`

|

||||

--saveExternalData 将权重,常量等数据存储在额外文件中,默认为0,也就是`false`

|

||||

|

||||

--useGeluApproximation 在进行Gelu算子合并时,使用Gelu的近似算法,默认为1 ,也就是`true`

|

||||

|

||||

```

|

||||

|

||||

|

|

|

|||

|

|

@ -1,9 +1,8 @@

|

|||

# 单输入模型离线量化工具

|

||||

# 离线量化工具(输入少量数据量化)

|

||||

`./quantized.out origin.mnn quan.mnn imageInputConfig.json`

|

||||

|

||||

MNN quantized.out工具已支持通用(任意输入个数、维度、类型)模型离线量化, 但这里的多输入模型仅仅支持非图片输入类模型。

|

||||

|

||||

MNN现已推出基于TensorFlow/Pytorch的模型压缩工具mnncompress,请查看[文档](https://mnn-docs.readthedocs.io/en/latest/tools/compress.html)选择使用

|

||||

|

||||

## 参数

|

||||

- 第一个参数为原始模型文件路径,即待量化的浮点模

|

||||

|

|

@ -31,7 +30,7 @@ MNN现已推出基于TensorFlow/Pytorch的模型压缩工具mnncompress,请查

|

|||

|--------------------|------|

|

||||

| KL | 使用KL散度进行特征量化系数的校正,一般需要100 ~ 1000张图片(若发现精度损失严重,可以适当增减样本数量,特别是检测/对齐等回归任务模型,样本建议适当减少) |

|

||||

| ADMM | 使用ADMM(Alternating Direction Method of Multipliers)方法进行特征量化系数的校正,一般需要一个batch的数据 |

|

||||

| EMA | 使用指数滑动平均来计算特征量化参数,这个方法会对特征进行非对称量化,精度可能比上面两种更好。这个方法也是[MNNPythonOfflineQuant](https://github.com/alibaba/MNN/tree/master/tools/MNNPythonOfflineQuant)的底层方法,建议使用这个方法量化时,保留你pb或onnx模型中的BatchNorm,并使用 --forTraining 将你的模型转到MNN,然后基于此带BatchNorm的模型使用EMA方法量化。另外,使用这个方法时batch size应设置为和训练时差不多最好。 |

|

||||

| EMA | 使用指数滑动平均来计算特征量化参数,这个方法会对特征进行非对称量化,精度可能比上面两种更好。使用这个方法时batch size应设置为和训练时差不多最好。|

|

||||

|

||||

| weight_quantize_method | 说明 |

|

||||

|--------------------|------|

|

||||

|

|

@ -39,10 +38,12 @@ MNN现已推出基于TensorFlow/Pytorch的模型压缩工具mnncompress,请查

|

|||

| ADMM | 使用ADMM方法进行权值量化 |

|

||||

|

||||

## 多输入模型的参数设置的特别说明(MNN现阶段仅支持输入数据类型是非图片的多输入模型)

|

||||

|

||||

| 需要特别指定的参数 | 设置值 |

|

||||

|--------------------|------|

|

||||

| input_type | `str`:输入数据的类型,"sequence" |

|

||||

| path | `str`:存放校正特征量化系数的输入数据目录 |,

|

||||

| path | `str`:存放校正特征量化系数的输入数据目录 |

|

||||

|

||||

例如在quant.json文件中 "path": "/home/data/inputs_dir/",你所构造的矫正数据集有两个,分别存放在input_0和input_1子目录下,即"/home/data/inputs_dir/input_0"和"/home/data/inputs_dir/input_1".由GetMNNInfo工具可以得到模型的输入输出名称,例如该模型的输入有三个:data0, data1, data2,输出有两个:out1, out2. 那么在input_0和input_1子目录下分别有六个文件:data0.txt, data1.txt, data2.txt, out1.txt, out2.txt, input.json. 其中的五个文件名要和模型的输入输出名对应,最后一个input.json文件则描述的是输入名和对应的shape内容:

|

||||

```json

|

||||

{

|

||||

|

|

|

|||

|

|

@ -32,7 +32,7 @@ Model Version: < 2.0.0

|

|||

- `runMask:int` 是否输出推理中间结果,0为不输出,1为只输出每个算子的输出结果({op_name}.txt);2为输出每个算子的输入(Input_{op_name}.txt)和输出({op_name}.txt)结果; 默认输出当前目录的output目录下(使用工具之前要自己建好output目录); 16为开启自动选择后端;32为针对Winograd算法开启内存优化模式,开启后会降低模型(如果含有Winograd Convolution算子)运行时的内存但可能会导致算子的性能损失。可选,默认为`0`

|

||||

- `forwardType:int` 执行推理的计算设备,有效值为:0(CPU)、1(Metal)、2(CUDA)、3(OpenCL)、6(OpenGL),7(Vulkan) ,9 (TensorRT),可选,默认为`0`

|

||||

- `numberThread:int` 线程数仅对CPU有效,可选,默认为`4`

|

||||

- `precision_memory:int` 测试精度与内存模式,precision_memory % 16 为精度,有效输入为:0(Normal), 1(High), 2(Low), 3(Low_BF16),可选,默认为`2` ; precision_memory / 16 为内存设置,默认为 0 (memory_normal) 。例如测试 memory 为 low (2) ,precision 为 1 (high) 时,设置 precision_memory = 9 (2 * 4 + 1)

|

||||

- `precision_memory:int` 测试精度与内存模式,precision_memory % 4 为精度,有效输入为:0(Normal), 1(High), 2(Low), 3(Low_BF16),可选,默认为`2` ; (precision_memory / 4) % 4 为内存设置,默认为 0 (memory_normal) 。例如测试 memory 为 low (2) ,precision 为 1 (high) 时,设置 precision_memory = 9 (2 * 4 + 1)

|

||||

- `inputSize:str` 输入tensor的大小,输入格式为:`1x3x224x224`,可选,默认使用模型默认输入

|

||||

|

||||

|

||||

|

|

@ -480,7 +480,7 @@ GPU 内存输入测试用例

|

|||

- `testmode:int` 默认为 0 ,测试输入GPU内存的类型,0 (OpenCL Buffer) 、 1(OpenGL Texture)

|

||||

- `forwardType:int` 执行推理的计算设备,有效值为:0(CPU)、1(Metal)、2(CUDA)、3(OpenCL)、6(OpenGL),7(Vulkan) ,9 (TensorRT),可选,默认为`0`

|

||||

- `numberThread:int` GPU的线程数,可选,默认为`1`

|

||||

- `precision_memory:int` 测试精度与内存模式,precision_memory % 16 为精度,有效输入为:0(Normal), 1(High), 2(Low), 3(Low_BF16),可选,默认为`2` ; precision_memory / 16 为内存设置,默认为 0 (memory_normal) 。例如测试 memory 为 2(low) ,precision 为 1 (high) 时,设置 precision_memory = 9 (2 * 4 + 1)

|

||||

- `precision_memory:int` 测试精度与内存模式,precision_memory % 4 为精度,有效输入为:0(Normal), 1(High), 2(Low), 3(Low_BF16),可选,默认为`0` ; (precision_memory / 4) % 4 为内存设置,默认为 0 (memory_normal) 。 (precision_memory / 16) % 4 为功耗设置,默认为0(power_normal)。例如测试 memory 为 2(low) ,precision 为 1 (high) 时,设置 precision_memory = 9 (2 * 4 + 1)

|

||||

|

||||

|

||||

## 在Android中使用测试工具

|

||||

|

|

|

|||

|

|

@ -1,100 +0,0 @@

|

|||

# 训练量化

|

||||

## 什么是训练量化

|

||||

与离线量化不同,训练量化需要在训练中模拟量化操作的影响,并通过训练使得模型学习并适应量化操作所带来的误差,从而提高量化的精度。因此训练量化也称为Quantization-aware Training(QAT),意指训练中已经意识到此模型将会转换成量化模型。

|

||||

|

||||

## 如何在MNN中使用训练量化

|

||||

已经通过其他训练框架如TensorFlow、Pytorch等训练得到一个float模型,此时可以通过先将此float模型通过MNNConverter转换为MNN统一的模型格式,然后使用MNN提供的离线量化工具直接量化得到一个全int8推理模型。如果此模型的精度不满足要求,则可以通过训练量化来提高量化模型的精度。

|

||||

|

||||

使用步骤:

|

||||

1. 首先通过其他训练框架训练得到原始float模型;

|

||||

2. 编译MNNConverter模型转换工具;

|

||||

3. 使用MNNConverter将float模型转成MNN统一格式模型,因为要进行再训练,建议保留BN,Dropout等训练过程中会使用到的算子,这可以通过MNNConverter的 --forTraining 选项实现;

|

||||

4. 参考MNN_ROOT/tools/train/source/demo/mobilenetV2Train.cpp 中的 MobilenetV2TrainQuant demo来实现训练量化的功能,下面以MobilenetV2的训练量化为例,来看一下如何读取并将模型转换成训练量化模型

|

||||

5. 观察准确率变化,代码保存下来的模型即为量化推理模型

|

||||

```cpp

|

||||

// mobilenetV2Train.cpp

|

||||

// 读取转换得到的MNN float模型

|

||||

auto varMap = Variable::loadMap(argv[1]);

|

||||

if (varMap.empty()) {

|

||||

MNN_ERROR("Can not load model %s\n", argv[1]);

|

||||

return 0;

|

||||

}

|

||||

// 指定量化比特数

|

||||

int bits = 8;

|

||||

if (argc > 6) {

|

||||

std::istringstream is(argv[6]);

|

||||

is >> bits;

|

||||

}

|

||||

if (1 > bits || bits > 8) {

|

||||

MNN_ERROR("bits must be 2-8, use 8 default\n");

|

||||

bits = 8;

|

||||

}

|

||||

// 获得模型的输入和输出

|

||||

auto inputOutputs = Variable::getInputAndOutput(varMap);

|

||||

auto inputs = Variable::mapToSequence(inputOutputs.first);

|

||||

auto outputs = Variable::mapToSequence(inputOutputs.second);

|

||||

|

||||

// 扫描整个模型,并将inference模型转换成可训练模型,此时得到的模型是可训练的float模型

|

||||

std::shared_ptr<Module> model(PipelineModule::extract(inputs, outputs, true));

|

||||

// 将上面得到的模型转换成训练量化模型,此处指定量化bit数

|

||||

PipelineModule::turnQuantize(model.get(), bits);

|

||||

// 进行训练,观察训练结果,保存得到的模型即是量化模型

|

||||

MobilenetV2Utils::train(model, 1001, 1, trainImagesFolder, trainImagesTxt, testImagesFolder, testImagesTxt);

|

||||

```

|

||||

## MNN训练量化原理

|

||||

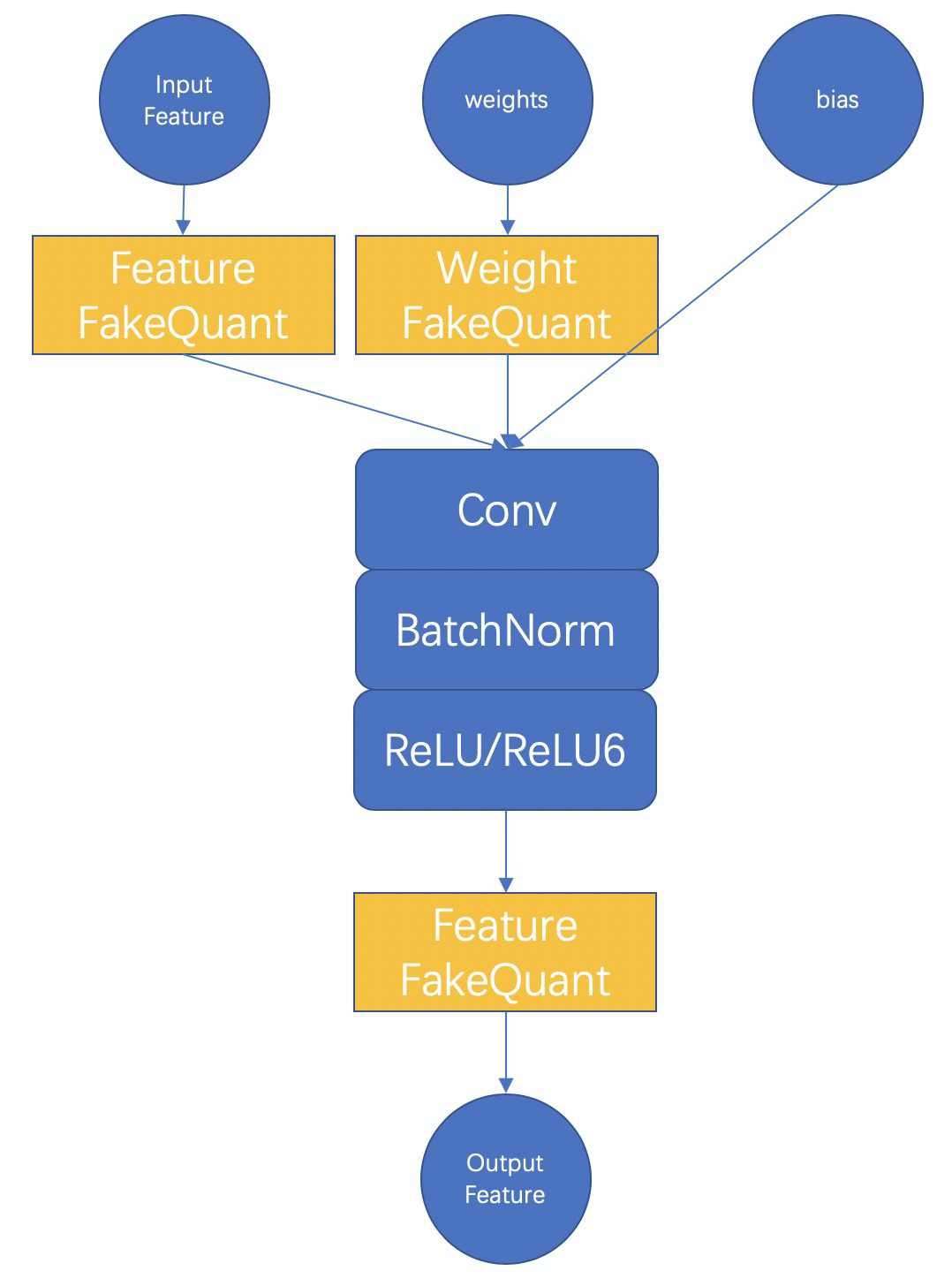

MNN训练量化的基本原理如下图所示

|

||||

|

||||

以int8量化为例,首先要理解全int8推理的整个过程,全int8推理,即feature要量化为int8,weight和bias也要量化为int8,输出结果可以是float或者是int8,视该卷积模块的后面一个op的情况而定。而训练量化的本质就是在训练的过程中去模拟量化操作的影响,借由训练来使得模型学习并适应这种影响,以此来提高最后量化模型的准确率。

|

||||

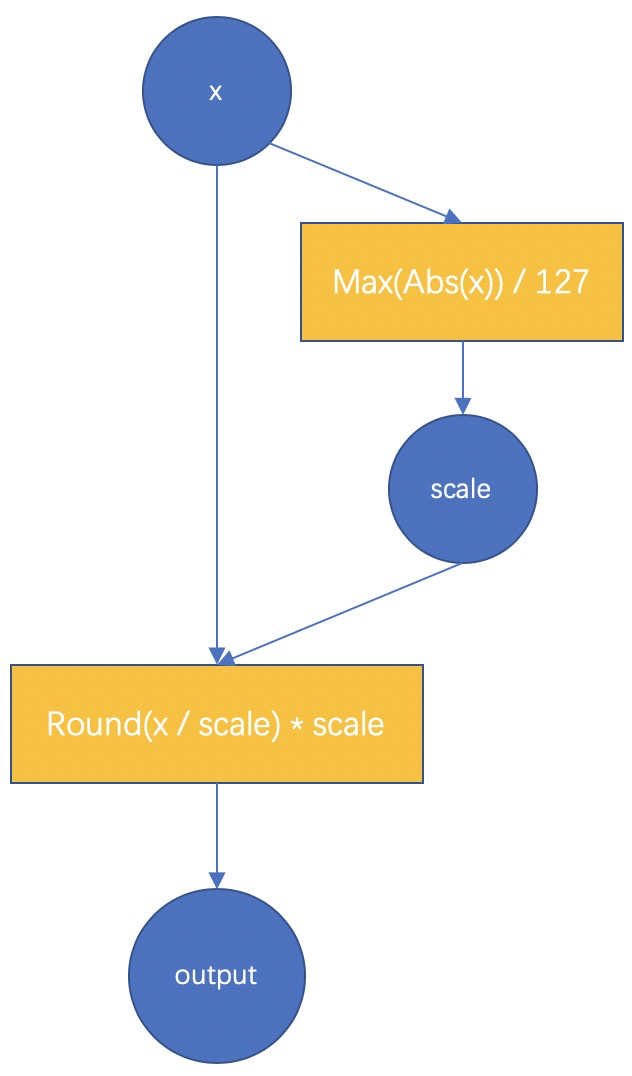

因此在两种 FakeQuant 模块中,我们的主要计算为

|

||||

|

||||

对于权值和特征的fake-quant基本都和上图一致,不一样的是对于特征由于其范围是随输入动态变化的,而最终int8模型中必须固定一个对于输入特征的scale值,所以,我们对每一此前向计算出来的scale进行了累积更新,例如使用滑动平均,或者直接取每一次的最大值。对于权值的scale,则没有进行平均,因为每一次更新之后的权值都是学习之后的较好的结果,没有状态保留。

|

||||

此外,对于特征,我们提供了分通道(PerChannel)或者不分通道(PerTensor)的scale统计方法,可根据效果选择使用。对于权值,我们则使用分通道的量化方法,效果较好。

|

||||

|

||||

上述是在训练中的training阶段的计算过程,在test阶段,我们会将BatchNorm合进权值,使用训练过程得到的特征scale和此时权值的scale(每次重新计算得到)对特征和权值进行量化,并真实调用MNN中的 _FloatToInt8 和 _Int8ToFloat 来进行推理,以保证测试得到的结果和最后转换得到的全int8推理模型的结果一致。

|

||||

|

||||

最后保存模型的时候会自动保存test阶段的模型,并去掉一些冗余的算子,所以直接保存出来即是全int8推理模型。

|

||||

|

||||

## 训练量化结果

|

||||

目前我们在Lenet,MobilenetV2,以及内部的一些人脸模型上进行了测试,均取得了不错的效果,下面给出MobilenetV2的一些详细数据

|

||||

|

||||

| | 准确率 / 模型大小 |

|

||||

| --- | --- |

|

||||

| 原始float模型 | 72.324% / 13M |

|

||||

| MNN训练量化int8模型 | 72.456% / 3.5M |

|

||||

| TF训练量化int8模型 | 71.1% / 3.5M (原始 71.8% / 13M) |

|

||||

|

||||

|

||||

上述数据是使用batchsize为32,训练100次迭代得到的,即仅使用到了3200张图片进行训练量化,在ImageNet验证集5万张图片上进行测试得到。可以看到int8量化模型的准确率甚至比float还要高一点,而模型大小下降了73%,同时还可以得到推理速度上的增益。

|

||||

|

||||

【注】此处使用到的float模型为TensorFlow官方提供的模型,但官方给出的准确率数据是71.8%,我们测出来比他们要高一点,原因是因为我们使用的预处理代码上有细微差别所致。

|

||||

|

||||

## 使用训练量化的一些建议

|

||||

|

||||

1. 模型转换时保留BatchNorm和Dropout等训练中会用到的算子,这些算子对训练量化也有帮助

|

||||

2. 要使用原始模型接近收敛阶段的训练参数,训练参数不对,将导致训练量化不稳定

|

||||

3. 学习率要调到比较小

|

||||

4. 我们仅对卷积层实现了训练量化,因此如果用MNN从零开始搭建模型,后期接训练量化,或者Finetune之后想继续训练量化,那么需要用卷积层来实现全连接层即可对全连接层也进行训练量化。示例代码如下

|

||||

```cpp

|

||||

// 用卷积层实现输入1280,输出为4的全连接层

|

||||

NN::ConvOption option;

|

||||

option.channel = {1280, 4};

|

||||

mLastConv = std::shared_ptr<Module>(NN::Conv(option));

|

||||

```

|

||||

|

||||

## 训练量化的配置选项

|

||||

详见 MNN_ROOT/tools/train/source/module/PipelineModule.hpp

|

||||

```cpp

|

||||

// 特征scale的计算方法

|

||||

enum FeatureScaleStatMethod {

|

||||

PerTensor = 0, // 对特征不分通道进行量化

|

||||

PerChannel = 1 // 对特征分通道进行量化,deprecated

|

||||

};

|

||||

// 特征scale的更新方法

|

||||

enum ScaleUpdateMethod {

|

||||

Maximum = 0, // 使用每一次计算得到的scale的最大值

|

||||

MovingAverage = 1 // 使用滑动平均来更新

|

||||

};

|

||||

// 指定训练量化的bit数,特征scale的计算方法,特征scale的更新方法,

|

||||

void toTrainQuant(const int bits = 8, NN::FeatureScaleStatMethod featureScaleStatMethod = NN::PerTensor,

|

||||

NN::ScaleUpdateMethod scaleUpdateMethod = NN::MovingAverage);

|

||||

```

|

||||

|

|

@ -2,9 +2,9 @@

|

|||

|

||||

## 模型支持与下载

|

||||

|

||||

1. runwayml/stable-diffusion-v1-5

|

||||

1. stable-diffusion-v1-5

|

||||

```

|

||||

https://huggingface.co/runwayml/stable-diffusion-v1-5/tree/main

|

||||

https://huggingface.co/stable-diffusion-v1-5/stable-diffusion-v1-5/tree/main

|

||||

```

|

||||

2. chilloutmix

|

||||

```

|

||||

|

|

|

|||

|

|

@ -0,0 +1,50 @@

|

|||

# 模型下载

|

||||

|

||||

## 大语言模型

|

||||

|

||||

| Model | ModelScope | Hugging Face |

|

||||

| -------- | ----------- | ------------ |

|

||||

| [Qwen-VL-Chat](https://modelscope.cn/models/qwen/Qwen-VL-Chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen-VL-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen-VL-Chat-MNN) |

|

||||

| [Baichuan2-7B-Chat](https://modelscope.cn/models/baichuan-inc/Baichuan2-7B-Chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/Baichuan2-7B-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Baichuan2-7B-Chat-MNN) |

|

||||

| [bge-large-zh](https://modelscope.cn/models/AI-ModelScope/bge-large-zh/summary) | [Q4_1](https://modelscope.cn/models/MNN/bge-large-zh-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/bge-large-zh-MNN) |

|

||||

| [chatglm-6b](https://modelscope.cn/models/ZhipuAI/ChatGLM-6B/summary) | [Q4_1](https://modelscope.cn/models/MNN/chatglm-6b-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/chatglm-6b-MNN) |

|

||||

| [chatglm2-6b](https://modelscope.cn/models/ZhipuAI/chatglm2-6b/summary) | [Q4_1](https://modelscope.cn/models/MNN/chatglm2-6b-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/chatglm2-6b-MNN) |

|

||||

| [chatglm3-6b](https://modelscope.cn/models/ZhipuAI/chatglm3-6b/summary) | [Q4_1](https://modelscope.cn/models/MNN/chatglm3-6b-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/chatglm3-6b-MNN) |

|

||||

| [codegeex2-6b](https://modelscope.cn/models/MNN/codegeex2-6b-MNN/summary) | [Q4_1](https://modelscope.cn/models/MNN/codegeex2-6b-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/codegeex2-6b-MNN) |

|

||||

| [deepseek-llm-7b-chat](https://modelscope.cn/models/deepseek-ai/deepseek-llm-7b-chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/deepseek-llm-7b-chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/deepseek-llm-7b-chat-MNN) |

|

||||

| [gemma-2-2b-it](https://modelscope.cn/models/llm-research/gemma-2-2b-it) | [Q4_1](https://modelscope.cn/models/MNN/gemma-2-2b-it-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/gemma-2-2b-it-MNN) |

|

||||

| [glm-4-9b-chat](https://modelscope.cn/models/ZhipuAI/glm-4-9b-chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/glm-4-9b-chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/glm-4-9b-chat-MNN) |

|

||||

| [gte_sentence-embedding_multilingual-base](https://modelscope.cn/models/iic/gte_sentence-embedding_multilingual-base/summary) | [Q4_1](https://modelscope.cn/models/MNN/gte_sentence-embedding_multilingual-base-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/gte_sentence-embedding_multilingual-base-MNN) |

|

||||

| [internlm-chat-7b](https://modelscope.cn/models/AI-ModelScope/internlm-chat-7b/summary) | [Q4_1](https://modelscope.cn/models/MNN/internlm-chat-7b-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/internlm-chat-7b-MNN) |

|

||||

| [Llama-2-7b-chat](https://modelscope.cn/models/modelscope/Llama-2-7b-chat-ms/summary) | [Q4_1](https://modelscope.cn/models/MNN/Llama-2-7b-chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Llama-2-7b-chat-MNN) |

|

||||

| [Llama-3-8B-Instruct](https://modelscope.cn/models/modelscope/Meta-Llama-3-8B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Llama-3-8B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Llama-3-8B-Instruct-MNN) |

|

||||

| [Llama-3.2-1B-Instruct](https://modelscope.cn/models/LLM-Research/Llama-3.2-1B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Llama-3.2-1B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Llama-3.2-1B-Instruct-MNN) |

|

||||

| [Llama-3.2-3B-Instruct](https://modelscope.cn/models/LLM-Research/Llama-3.2-3B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Llama-3.2-3B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Llama-3.2-3B-Instruct-MNN) |

|

||||

| [OpenELM-1_1B-Instruct](https://huggingface.co/apple/OpenELM-1_1B-Instruct) | [Q4_1](https://modelscope.cn/models/MNN/OpenELM-1_1B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/OpenELM-1_1B-Instruct-MNN) |

|

||||

| [OpenELM-270M-Instruct](https://huggingface.co/apple/OpenELM-270M-Instruct) | [Q4_1](https://modelscope.cn/models/MNN/OpenELM-270M-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/OpenELM-270M-Instruct-MNN) |

|

||||

| [OpenELM-3B-Instruct](https://huggingface.co/apple/OpenELM-3B-Instruct) | [Q8_1](https://modelscope.cn/models/MNN/OpenELM-3B-Instruct-MNN) | [Q8_1](https://huggingface.co/taobao-mnn/OpenELM-3B-Instruct-MNN) |

|

||||

| [OpenELM-450M-Instruct](https://huggingface.co/apple/OpenELM-450M-Instruct) | [Q4_1](https://modelscope.cn/models/MNN/OpenELM-450M-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/OpenELM-450M-Instruct-MNN) |

|

||||

| [phi-2](https://modelscope.cn/models/mengzhao/phi-2/summary) | [Q4_1](https://modelscope.cn/models/MNN/phi-2-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/phi-2-MNN) |

|

||||

| [qwen/Qwen-1_8B-Chat](https://modelscope.cn/models/qwen/Qwen-1_8B-Chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen-1_8B-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen-1_8B-Chat-MNN) |

|

||||

| [Qwen-7B-Chat](https://modelscope.cn/models/qwen/Qwen-7B-Chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen-7B-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen-7B-Chat-MNN) |

|

||||

| [Qwen1.5-0.5B-Chat](https://modelscope.cn/models/qwen/Qwen1.5-0.5B-Chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen1.5-0.5B-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen1.5-0.5B-Chat-MNN) |

|

||||

| [Qwen1.5-1.8B-Chat](https://modelscope.cn/models/qwen/Qwen1.5-1.8B-Chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen1.5-1.8B-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen1.5-1.8B-Chat-MNN) |

|

||||

| [Qwen1.5-4B-Chat](https://modelscope.cn/models/qwen/Qwen1.5-4B-Chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen1.5-4B-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen1.5-4B-Chat-MNN) |

|

||||

| [Qwen1.5-7B-Chat](https://modelscope.cn/models/qwen/Qwen1.5-7B-Chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen1.5-7B-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen1.5-7B-Chat-MNN) |

|

||||

| [Qwen2-0.5B-Instruct](https://modelscope.cn/models/qwen/Qwen2-0.5B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2-0.5B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2-0.5B-Instruct-MNN) |

|

||||

| [Qwen2-1.5B-Instruct](https://modelscope.cn/models/qwen/Qwen2-1.5B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2-1.5B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2-1.5B-Instruct-MNN) |

|

||||

| [Qwen2-7B-Instruct](https://modelscope.cn/models/qwen/Qwen2-7B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2-7B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2-7B-Instruct-MNN) |

|

||||

| [Qwen2-VL-2B-Instruct](https://modelscope.cn/models/qwen/Qwen2-VL-2B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2-VL-2B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2-VL-2B-Instruct-MNN) |

|

||||

| [Qwen2-VL-7B-Instruct](https://modelscope.cn/models/qwen/Qwen2-VL-7B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2-VL-7B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2-VL-7B-Instruct-MNN) |

|

||||

| [Qwen2.5-0.5B-Instruct](https://modelscope.cn/models/qwen/Qwen2.5-0.5B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2.5-0.5B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2.5-0.5B-Instruct-MNN) |

|

||||

| [Qwen2.5-1.5B-Instruct](https://modelscope.cn/models/qwen/Qwen2.5-1.5B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2.5-1.5B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2.5-1.5B-Instruct-MNN) |

|

||||

| [Qwen2.5-3B-Instruct](https://modelscope.cn/models/qwen/Qwen2.5-3B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2.5-3B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2.5-3B-Instruct-MNN) |

|

||||

| [Qwen2.5-7B-Instruct](https://modelscope.cn/models/qwen/Qwen2.5-7B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2.5-7B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2.5-7B-Instruct-MNN) |

|

||||

| [Qwen2.5-Coder-1.5B-Instruct](https://modelscope.cn/models/qwen/Qwen2.5-Coder-1.5B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2.5-Coder-1.5B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2.5-Coder-1.5B-Instruct-MNN) |

|

||||

| [Qwen2.5-Coder-7B-Instruct](https://modelscope.cn/models/qwen/Qwen2.5-Coder-7B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2.5-Coder-7B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2.5-Coder-7B-Instruct-MNN) |

|

||||

| [Qwen2.5-Math-1.5B-Instruct](https://modelscope.cn/models/qwen/Qwen2.5-Math-1.5B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2.5-Math-1.5B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2.5-Math-1.5B-Instruct-MNN) |

|

||||

| [Qwen2.5-Math-7B-Instruct](https://modelscope.cn/models/qwen/Qwen2.5-Math-7B-Instruct/summary) | [Q4_1](https://modelscope.cn/models/MNN/Qwen2.5-Math-7B-Instruct-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Qwen2.5-Math-7B-Instruct-MNN) |

|

||||

| [reader-lm-0.5b](https://huggingface.co/jinaai/reader-lm-0.5b) | [Q4_1](https://modelscope.cn/models/MNN/reader-lm-0.5b-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/reader-lm-0.5b-MNN) |

|

||||

| [reader-lm-1.5b](https://huggingface.co/jinaai/reader-lm-1.5b) | [Q4_1](https://modelscope.cn/models/MNN/reader-lm-1.5b-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/reader-lm-1.5b-MNN) |

|

||||

| [TinyLlama-1.1B-Chat-v1.0](https://modelscope.cn/models/AI-ModelScope/TinyLlama-1.1B-Chat-v1.0/summary) | [Q4_1](https://modelscope.cn/models/MNN/TinyLlama-1.1B-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/TinyLlama-1.1B-Chat-MNN) |

|

||||

| [Yi-6B-Chat](https://modelscope.cn/models/01ai/Yi-6B-Chat/summary) | [Q4_1](https://modelscope.cn/models/MNN/Yi-6B-Chat-MNN) | [Q4_1](https://huggingface.co/taobao-mnn/Yi-6B-Chat-MNN) |

|

||||

|

|

@ -41,6 +41,11 @@ void Executor::setGlobalExecutorConfig(MNNForwardType type, const BackendConfig&

|

|||

}

|

||||

MNN_ASSERT(nullptr != rt);

|

||||

mAttr->firstType = type;

|

||||

// Cache threadnumber and config

|

||||

mAttr->numThread = numberThread;

|

||||

mAttr->config = config;

|

||||

// Remove sharedContext because it's not used for create backend

|

||||

mAttr->config.sharedContext = nullptr;

|

||||

}

|

||||

|

||||

int Executor::getCurrentRuntimeStatus(RuntimeStatus statusEnum) {

|

||||

|

|

@ -219,6 +224,11 @@ void Executor::RuntimeManager::setMode(Interpreter::SessionMode mode) {

|

|||

}

|

||||

void Executor::RuntimeManager::setHint(Interpreter::HintMode mode, int value) {

|

||||

mInside->modes.setHint(mode, value);

|

||||

auto current = ExecutorScope::Current();

|

||||

auto rt = current->getRuntime();

|

||||

for (auto& iter : rt.first) {

|

||||

iter.second->setRuntimeHint(mInside->modes.runtimeHint);

|

||||

}

|

||||

}

|

||||

void Executor::RuntimeManager::setExternalPath(std::string path, int type) {

|

||||

mInside->modes.setExternalPath(path, type);

|

||||

|

|

|

|||

|

|

@ -91,6 +91,7 @@ bool VARP::fix(VARP::InputType type) const {

|

|||

newVARP->expr().first->inside()->mHoldBackend = pipelineInfo.first.cache.second;

|

||||

}

|

||||

Variable::replace(VARP(mContent), newVARP);

|

||||

inputTensor->wait(MNN::Tensor::MAP_TENSOR_READ, true);

|

||||

return true;

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|

@ -25,6 +25,8 @@ struct RuntimeAttr {

|

|||

struct ExecutorAttr {

|

||||

std::shared_ptr<Backend> constantBackend;

|

||||

MNNForwardType firstType;

|

||||

int numThread = 1;

|

||||

BackendConfig config;

|

||||

std::string externalFile;

|

||||

};

|

||||

};

|

||||

|

|

|

|||

|

|

@ -13,6 +13,7 @@

|

|||

#include <MNN/expr/ExecutorScope.hpp>

|

||||

#include "MNN_generated.h"

|

||||

#include "core/TensorUtils.hpp"

|

||||

#include "core/OpCommonUtils.hpp"

|

||||

#include "core/Session.hpp"

|

||||

#include "core/MNNMemoryUtils.h"

|

||||

#include "core/Backend.hpp"

|

||||

|

|

@ -61,19 +62,7 @@ int Utils::convertFormat(Dimensionformat format) {

|

|||

}

|

||||

|

||||

DataType Utils::convertDataType(halide_type_t type) {

|

||||

if (type.code == halide_type_float) {

|

||||

return DataType_DT_FLOAT;

|

||||

}

|

||||

if (type.code == halide_type_uint && type.bits == 8) {

|

||||

return DataType_DT_UINT8;

|

||||

}

|

||||

if (type.code == halide_type_int && type.bits == 8) {

|

||||

return DataType_DT_INT8;

|

||||

}

|

||||

if (type.code == halide_type_int && type.bits == 32) {

|

||||

return DataType_DT_INT32;

|

||||

}

|

||||

return DataType_DT_INVALID;

|

||||

return OpCommonUtils::convertDataType(type);

|

||||

}

|

||||

halide_type_t Utils::revertDataType(DataType dataType) {

|

||||

CONVERT(DataType_DT_FLOAT, halide_type_of<float>(), dataType);

|

||||

|

|

|

|||

|

|

@ -32,8 +32,10 @@ static MNN::Express::Executor::RuntimeManager* _createDefaultRuntimeManager(cons

|

|||

sche_config.backendConfig = config->backend->config;

|

||||

} else {

|

||||

auto exe = ExecutorScope::Current();

|

||||

sche_config.type = exe->getAttr()->firstType;

|

||||

sche_config.numThread = 1;

|

||||

auto attr = exe->getAttr();

|

||||

sche_config.type = attr->firstType;

|

||||

sche_config.numThread = attr->numThread;

|

||||

sche_config.backendConfig = &attr->config;

|

||||

}

|

||||

return Executor::RuntimeManager::createRuntimeManager(sche_config);

|

||||

}

|

||||

|

|

|

|||

|

|

@ -20,9 +20,15 @@

|

|||

#endif

|

||||

|

||||

#ifdef MNN_USE_LOGCAT

|

||||

#if defined(__OHOS__)

|

||||

#include <hilog/log.h>

|

||||

#define MNN_ERROR(format, ...) {char logtmp[4096]; snprintf(logtmp, 4096, format, ##__VA_ARGS__); OH_LOG_Print(LOG_APP, LOG_ERROR, LOG_DOMAIN, "MNNJNI", (const char*)logtmp);}

|

||||

#define MNN_PRINT(format, ...) {char logtmp[4096]; snprintf(logtmp, 4096, format, ##__VA_ARGS__); OH_LOG_Print(LOG_APP, LOG_DEBUG, LOG_DOMAIN, "MNNJNI", (const char*)logtmp);}

|

||||

#else

|

||||

#include <android/log.h>

|

||||

#define MNN_ERROR(format, ...) __android_log_print(ANDROID_LOG_ERROR, "MNNJNI", format, ##__VA_ARGS__)

|

||||

#define MNN_PRINT(format, ...) __android_log_print(ANDROID_LOG_INFO, "MNNJNI", format, ##__VA_ARGS__)

|

||||

#endif

|

||||

#elif defined MNN_BUILD_FOR_IOS

|

||||

// on iOS, stderr prints to XCode debug area and syslog prints Console. You need both.

|

||||

#include <syslog.h>

|

||||

|

|

@ -67,8 +73,8 @@ MNN_ERROR("Check failed: %s ==> %s\n", #success, #log); \

|

|||

#endif

|

||||

#define STR_IMP(x) #x

|

||||

#define STR(x) STR_IMP(x)

|

||||

#define MNN_VERSION_MAJOR 2

|

||||

#define MNN_VERSION_MINOR 9

|

||||

#define MNN_VERSION_PATCH 6

|

||||

#define MNN_VERSION_MAJOR 3

|

||||

#define MNN_VERSION_MINOR 0

|

||||

#define MNN_VERSION_PATCH 0

|

||||

#define MNN_VERSION STR(MNN_VERSION_MAJOR) "." STR(MNN_VERSION_MINOR) "." STR(MNN_VERSION_PATCH)

|

||||

#endif /* MNNDefine_h */

|

||||

|

|

|

|||

|

|

@ -4,13 +4,12 @@ cmake ../../../ \

|

|||

-DCMAKE_BUILD_TYPE=Release \

|

||||

-DOHOS_ARCH="arm64-v8a" \

|

||||

-DOHOS_STL=c++_static \

|

||||

-DMNN_USE_LOGCAT=false \

|

||||

-DMNN_USE_LOGCAT=true \

|

||||

-DMNN_BUILD_BENCHMARK=ON \

|

||||

-DMNN_USE_SSE=OFF \

|

||||

-DMNN_SUPPORT_BF16=OFF \

|

||||

-DMNN_BUILD_TEST=ON \

|

||||

-DOHOS_PLATFORM_LEVEL=9 \

|

||||

-DMNN_BUILD_FOR_ANDROID_COMMAND=true \

|

||||

-DNATIVE_LIBRARY_OUTPUT=. -DNATIVE_INCLUDE_OUTPUT=. $1 $2 $3

|

||||

|

||||

make -j4

|

||||

|

|

|

|||

|

|

@ -1,5 +1,6 @@

|

|||

#!/bin/bash

|

||||

DIR=yanxing

|

||||

DIR=MNN

|

||||

hdc shell mkdir /data/local/tmp/MNN

|

||||

|

||||

make -j16

|

||||

hdc file send ./libMNN.so /data/local/tmp/$DIR/libMNN.so

|

||||

|

|

|

|||

|

|

@ -727,7 +727,7 @@

|

|||

952298B22B4D39050043978B /* MetalLoop.mm in Sources */ = {isa = PBXBuildFile; fileRef = 952298B12B4D39050043978B /* MetalLoop.mm */; };

|

||||

952298B42B4D39260043978B /* MetalArgMax.mm in Sources */ = {isa = PBXBuildFile; fileRef = 952298B32B4D39250043978B /* MetalArgMax.mm */; };

|

||||

952298B72B4D4CC80043978B /* CoreMLLayerNorm.cpp in Sources */ = {isa = PBXBuildFile; fileRef = 952298B52B4D4CC80043978B /* CoreMLLayerNorm.cpp */; };

|

||||

952298B82B4D4CC80043978B /* coreMLLayerNorm.hpp in Headers */ = {isa = PBXBuildFile; fileRef = 952298B62B4D4CC80043978B /* coreMLLayerNorm.hpp */; };

|

||||

952298B82B4D4CC80043978B /* CoreMLLayerNorm.hpp in Headers */ = {isa = PBXBuildFile; fileRef = 952298B62B4D4CC80043978B /* CoreMLLayerNorm.hpp */; };

|

||||

95278CE72B9F0999009E9B29 /* CPUDynamicQuant.hpp in Headers */ = {isa = PBXBuildFile; fileRef = 95278CE52B9F0999009E9B29 /* CPUDynamicQuant.hpp */; };

|

||||

95278CE82B9F0999009E9B29 /* CPUDynamicQuant.cpp in Sources */ = {isa = PBXBuildFile; fileRef = 95278CE62B9F0999009E9B29 /* CPUDynamicQuant.cpp */; };

|

||||

95278CEA2B9F09C0009E9B29 /* ShapeDynamicQuant.cpp in Sources */ = {isa = PBXBuildFile; fileRef = 95278CE92B9F09C0009E9B29 /* ShapeDynamicQuant.cpp */; };

|

||||

|

|

@ -796,6 +796,10 @@

|

|||

CEA49AA92AFD010900971CB7 /* MetalExecution.hpp in Headers */ = {isa = PBXBuildFile; fileRef = CEA49AA72AFD010900971CB7 /* MetalExecution.hpp */; };

|

||||

CEA82BDB2A15F8AD002CBC95 /* IdstConvolutionInt8.cpp in Sources */ = {isa = PBXBuildFile; fileRef = CEA82BD92A15F8AD002CBC95 /* IdstConvolutionInt8.cpp */; };

|

||||

CEA82BDC2A15F8AD002CBC95 /* IdstConvolutionInt8.hpp in Headers */ = {isa = PBXBuildFile; fileRef = CEA82BDA2A15F8AD002CBC95 /* IdstConvolutionInt8.hpp */; };

|

||||

CED81F8F2CC23C8A00666B48 /* CoreMLRelu6.cpp in Sources */ = {isa = PBXBuildFile; fileRef = CED81F8E2CC23C8A00666B48 /* CoreMLRelu6.cpp */; };

|

||||

CED81F902CC23C8A00666B48 /* CoreMLRelu6.hpp in Headers */ = {isa = PBXBuildFile; fileRef = CED81F8D2CC23C8A00666B48 /* CoreMLRelu6.hpp */; };

|

||||

CED81F932CC23FE800666B48 /* CoreMLMatMul.cpp in Sources */ = {isa = PBXBuildFile; fileRef = CED81F922CC23FE800666B48 /* CoreMLMatMul.cpp */; };

|

||||

CED81F942CC23FE800666B48 /* CoreMLMatMul.hpp in Headers */ = {isa = PBXBuildFile; fileRef = CED81F912CC23FE800666B48 /* CoreMLMatMul.hpp */; };

|

||||

CEDB20EB2846D07100AE9DC4 /* AppDelegate.m in Sources */ = {isa = PBXBuildFile; fileRef = CEDB20EA2846D07100AE9DC4 /* AppDelegate.m */; };

|

||||

CEDB20F42846D07100AE9DC4 /* Main.storyboard in Resources */ = {isa = PBXBuildFile; fileRef = CEDB20F22846D07100AE9DC4 /* Main.storyboard */; };

|

||||

CEDB20F62846D07200AE9DC4 /* Assets.xcassets in Resources */ = {isa = PBXBuildFile; fileRef = CEDB20F52846D07200AE9DC4 /* Assets.xcassets */; };

|

||||

|

|

@ -1580,7 +1584,7 @@

|

|||

952298B12B4D39050043978B /* MetalLoop.mm */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.objcpp; path = MetalLoop.mm; sourceTree = "<group>"; };

|

||||

952298B32B4D39250043978B /* MetalArgMax.mm */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.objcpp; path = MetalArgMax.mm; sourceTree = "<group>"; };

|

||||

952298B52B4D4CC80043978B /* CoreMLLayerNorm.cpp */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.cpp; path = CoreMLLayerNorm.cpp; sourceTree = "<group>"; };

|

||||

952298B62B4D4CC80043978B /* coreMLLayerNorm.hpp */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.h; path = coreMLLayerNorm.hpp; sourceTree = "<group>"; };

|

||||

952298B62B4D4CC80043978B /* CoreMLLayerNorm.hpp */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.h; path = CoreMLLayerNorm.hpp; sourceTree = "<group>"; };

|

||||

95278CE52B9F0999009E9B29 /* CPUDynamicQuant.hpp */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.h; path = CPUDynamicQuant.hpp; sourceTree = "<group>"; };

|

||||

95278CE62B9F0999009E9B29 /* CPUDynamicQuant.cpp */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.cpp; path = CPUDynamicQuant.cpp; sourceTree = "<group>"; };

|

||||

95278CE92B9F09C0009E9B29 /* ShapeDynamicQuant.cpp */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.cpp; path = ShapeDynamicQuant.cpp; sourceTree = "<group>"; };

|

||||

|

|

@ -1649,6 +1653,10 @@

|

|||

CEA49AA72AFD010900971CB7 /* MetalExecution.hpp */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.h; path = MetalExecution.hpp; sourceTree = "<group>"; };

|

||||

CEA82BD92A15F8AD002CBC95 /* IdstConvolutionInt8.cpp */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.cpp; path = IdstConvolutionInt8.cpp; sourceTree = "<group>"; };

|

||||

CEA82BDA2A15F8AD002CBC95 /* IdstConvolutionInt8.hpp */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.cpp.h; path = IdstConvolutionInt8.hpp; sourceTree = "<group>"; };

|

||||

CED81F8D2CC23C8A00666B48 /* CoreMLRelu6.hpp */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.cpp.h; path = CoreMLRelu6.hpp; sourceTree = "<group>"; };

|

||||

CED81F8E2CC23C8A00666B48 /* CoreMLRelu6.cpp */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.cpp.cpp; path = CoreMLRelu6.cpp; sourceTree = "<group>"; };

|

||||

CED81F912CC23FE800666B48 /* CoreMLMatMul.hpp */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.cpp.h; path = CoreMLMatMul.hpp; sourceTree = "<group>"; };

|

||||

CED81F922CC23FE800666B48 /* CoreMLMatMul.cpp */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.cpp.cpp; path = CoreMLMatMul.cpp; sourceTree = "<group>"; };

|

||||

CEDB20E72846D07100AE9DC4 /* demo.app */ = {isa = PBXFileReference; explicitFileType = wrapper.application; includeInIndex = 0; path = demo.app; sourceTree = BUILT_PRODUCTS_DIR; };

|

||||

CEDB20E92846D07100AE9DC4 /* AppDelegate.h */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.h; path = AppDelegate.h; sourceTree = "<group>"; };

|

||||

CEDB20EA2846D07100AE9DC4 /* AppDelegate.m */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.objc; path = AppDelegate.m; sourceTree = "<group>"; };

|

||||

|

|

@ -2364,8 +2372,12 @@

|

|||

4D9A933A26255BDA00F9B43C /* execution */ = {

|

||||

isa = PBXGroup;

|

||||

children = (

|

||||

CED81F912CC23FE800666B48 /* CoreMLMatMul.hpp */,

|

||||

CED81F922CC23FE800666B48 /* CoreMLMatMul.cpp */,

|

||||

CED81F8D2CC23C8A00666B48 /* CoreMLRelu6.hpp */,

|

||||

CED81F8E2CC23C8A00666B48 /* CoreMLRelu6.cpp */,

|

||||

952298B52B4D4CC80043978B /* CoreMLLayerNorm.cpp */,

|

||||